What is Crawl Budget and Why Should We Optimize It Correctly For Google?

When we set out to improve our SEO, aspects such as keywords, links and other factors always come to mind, and we forget about the protagonist of today’s post: the crawl budget.

In short, the first thing we usually think about is elements related to search intent, leaving aside very important aspects such as optimizing the crawl budget that search engines assign us.

Since this is an SEO aspect that many of us overlook, it is worth remembering that, for our website or blog to rank well in Google, we must also optimize our content for this search engine’s robots, which are the ones that crawl and index it.

But since this is a somewhat technical concept that is not too well known, especially among professionals who are just starting out, I would like to define precisely what the “crawl budget” is.

What is a Crawler?

Before explaining what the “crawl budget” is, I think it is important to define what a “crawler” is, so that you understand everything better.

A crawler is nothing more than software whose main task is to automatically explore and analyze websites and their URLs.

Sometimes, within the field of SEO, you will hear it called a “spider”.

What is the Crawl Budget?

The “crawl budget” is the amount of time that the Google crawler or spider invests in crawling a specific website, during which it analyzes all the content present on it.

When I say all the content, I mean everything that is under that Internet domain: images, texts, internal links, videos, etc.

Therefore, you can deduce from this definition that it is basically the amount of time that Google spends on your website or blog.

That being said, you may be wondering what it means in terms of SEO if this amount of time is higher or lower, right?

The truth is that, although a few years ago it was given much more importance than it is today, having a high “crawl budget” is still considered positive for the SEO of a website.

If you are still not clear about this term, I will analyze it in more detail below:

Why does Google assign crawl budgets to websites?

Even if you have understood what the term means, let me explain why this crawl time is allocated.

It is simply because Google does not have unlimited resources and, given the number of sites on the Internet today (and those created every day), it cannot give the same attention to all of them.

Therefore, it must allocate its crawl time according to this criterion, so that its “spiders” have clear guidelines about how long it is worth staying on each site.

In other words, the crawl budget will set the priorities when visiting all these pages.

First of all, I must tell you, for your peace of mind, that this SEO factor, so unfamiliar to many people, plays a greater role on large sites with a large number of URLs.

These are typically online stores or marketplaces that have been operating for many years.

Therefore, if you have a blog or corporate website only a few months old, you shouldn’t have too many reasons to worry about it.

Why is It So Important For SEO to Have a High Crawl Budget?

We said earlier that, ideally, a site’s crawl budget should be as high as possible, so that it benefits our organic ranking.

Some of the reasons are:

» It will index our content faster

If we have a blog where we periodically publish new content, it is clear that we want Googlebot to crawl and index it as soon as possible.

From there, we give the search engine the chance to analyze our content, evaluate it and, consequently, give us a better or worse ranking for the keywords we have worked on.

However, if the crawl budget is low, it is very likely that Googlebot will leave our website before it manages to reach this new content.

In that case, since the content was not picked up on this visit, we will have to wait for the next one before it can be indexed and ranked.

» Prevent it from indexing copied content before ours (original)

Another reason why we are interested in increasing this factor is a consequence that, on more than one occasion, has upset Digital Marketing professionals.

It concerns duplicate content, something that no professional who publishes original, quality articles every week wants to suffer.

Suppose we have a blog where we have published a carefully prepared post that adds a lot of value and, because our crawl time is low, Googlebot has not yet detected it. In the meantime, another website can copy it.

Thus, if the “plagiarist” website is lucky enough to be crawled by Google first, its URL will be the first one indexed for this content.

Therefore, when Googlebot reaches our content and sees that it is identical to the copy it has already indexed, it may conclude that ours is the duplicate.

How Can I Find Out What the Crawl Budget is for My Website Or Blog?

Taking into account all of the above, it is normal that you want to know how much time the search engine spider spends crawling your content.

I will show you how to do it, since it is very simple, fast and intuitive:

1. Register your website in Search Console

The first thing you should do is register your domain on the “Google Search Console” platform.

This should be a mandatory step whenever you start a project on the Internet. If you already have access, go directly to the next step.

2. Open the crawl options

Log in to your account and go to the option « Crawl > Crawl Stats » (in the current version of Search Console, this report is found under « Settings > Crawl stats »). There you will see a screen that shows these figures graphically.
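If you also have access to your server logs, you can get a rough second opinion on Googlebot activity. The following is only a minimal sketch: it assumes logs in the common "combined" format, uses made-up sample lines, and trusts the user agent string, which can be spoofed, so treat the numbers as an approximation rather than as what Search Console reports.

```python
import re
from collections import Counter

# Date part of a combined-log-format timestamp, e.g. [10/Oct/2023:13:55:36 +0000]
DATE_RE = re.compile(r"\[(\d{2}/\w{3}/\d{4}):")


def googlebot_hits_per_day(log_lines):
    """Count requests per day whose line mentions the Googlebot user agent."""
    hits = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue
        match = DATE_RE.search(line)
        if match:
            hits[match.group(1)] += 1
    return hits


# Hypothetical sample lines; in practice you would read them from your access log.
sample_log = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2023:14:02:10 +0000] "GET /contact/ HTTP/1.1" 200 2048 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2023:14:05:00 +0000] "GET / HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
    '66.249.66.1 - - [11/Oct/2023:09:00:00 +0000] "GET /blog/post/ HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

daily_hits = googlebot_hits_per_day(sample_log)
for day, count in daily_hits.items():
    print(day, count)
```

A steady or growing number of daily hits suggests that the crawler keeps coming back; a sudden drop is a reason to look at the report in Search Console more closely.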

How Can I Optimize the Crawl Budget of my Page?

Once we have the tools to know the most important data, the next, almost obligatory, step is to optimize it, since in most cases the crawl budget can be improved.

To do this, we must tackle all the URLs on our site that contain errors or do not add real value to our project and that, therefore, signal to Google that it is not worth investing much crawl budget in it.

1. Avoid cannibalization

As I explained in a previous post on this blog about keyword cannibalization, this is basically a problem that affects two pieces of content on the same website that target the same search intent.

In addition to being a reason for a penalty or a drop in search rankings, if you have 2 URLs that serve the same ‘query’, the crawler must crawl both pages.

This makes the crawler spend that time (however little) going through both, when crawling just one of them would be enough for Google to know which keywords you want that content to rank for.
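To spot this kind of overlap, it can help to keep a simple inventory of which query each URL is meant to target. The sketch below is only an illustration: the URLs, the queries and the function name are invented, and it only catches exact matches after lowercasing, not queries that are merely similar.

```python
from collections import defaultdict


def find_cannibalized_queries(target_queries):
    """Return the queries that more than one URL is trying to rank for."""
    urls_by_query = defaultdict(list)
    for url, query in target_queries.items():
        # Normalize so that "Crawl Budget" and "crawl budget" count as the same query.
        urls_by_query[query.strip().lower()].append(url)
    return {query: urls for query, urls in urls_by_query.items() if len(urls) > 1}


# Hypothetical inventory: URL -> main query it targets.
pages = {
    "/blog/what-is-crawl-budget/": "crawl budget",
    "/blog/crawl-budget-guide/": "Crawl Budget",
    "/blog/link-building/": "link building",
}

conflicts = find_cannibalized_queries(pages)
print(conflicts)
```

Any query that appears in the result is a candidate for merging the two pieces of content or redirecting one URL to the other.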

2. Do not index user searches

Normally, whether you have a corporate website, blog or eCommerce, it is common to have an internal search engine so that readers can locate the content they want.

The problem arises when we allow those searches to be indexed. This means that Googlebot has to spend more time exploring every search that has been performed.

- These look like: https://www.mexseo.info/?s=Quality+Backlink+Strategy&button=Submit (in this example, the search was “Quality Backlink Strategy”).
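If you have a list of crawled or indexed URLs, you can pick out the internal search pages with a few lines of code. This is a minimal sketch that assumes the search term travels in the `s` query parameter, as in the example URL above; other sites may use a different parameter, and the second URL in the list is invented for contrast.

```python
from urllib.parse import urlparse, parse_qs

# Assumption: the internal search engine uses the "s" query parameter.
SEARCH_PARAM = "s"


def is_internal_search(url, search_param=SEARCH_PARAM):
    """Return True if the URL carries the internal search parameter."""
    query = parse_qs(urlparse(url).query, keep_blank_values=True)
    return search_param in query


urls = [
    "https://www.mexseo.info/?s=Quality+Backlink+Strategy&button=Submit",
    "https://www.mexseo.info/blog/",  # hypothetical regular page
]

search_urls = [url for url in urls if is_internal_search(url)]
print(search_urls)
```

The URLs this returns are the ones you would want to keep out of the index, for example with a noindex directive or a rule in robots.txt.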

3. Do not index URLs that return 3XX redirects or 4XX errors

As in the previous example, depending on the configuration of your site, you may have an option enabled that causes all those page-not-found errors, bad redirects, etc. to be indexed.

This will also cause the total number of pages on your domain to increase significantly and, therefore, the crawl budget they consume.

After all, what sense would it make to index URLs of this type?
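As an illustration, if you export a list of URLs with their HTTP status codes (from a crawling tool or from your server logs), separating the ones to review is straightforward. The data and the function name below are hypothetical; this is a sketch, not a full audit.

```python
def urls_to_clean_up(crawl_results):
    """Split (url, status) pairs into redirects (3XX) and client errors (4XX)."""
    redirects = [url for url, status in crawl_results if 300 <= status < 400]
    client_errors = [url for url, status in crawl_results if 400 <= status < 500]
    return redirects, client_errors


# Hypothetical export: (URL, HTTP status code).
crawl_results = [
    ("/blog/", 200),
    ("/old-post/", 301),
    ("/deleted-page/", 404),
    ("/temporary-offer/", 302),
    ("/private/", 403),
]

redirects, client_errors = urls_to_clean_up(crawl_results)
print("Redirects:", redirects)
print("Client errors:", client_errors)
```

Both lists are candidates for fixing internal links, so that the crawler is sent straight to the final, valid URL.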

4. Do not generate content “by weight”

There are sites that generate a massive and/or abusive amount of content, just to have something to post every day.

In most of these cases, a large percentage of that content is of little value, has no prospect of ranking, and is created only to have something to share with its supposed audience.

Each post generates a URL and, added together, they result in a large number of URLs that will obviously keep Googlebot busy for too long, consuming crawl budget unnecessarily.

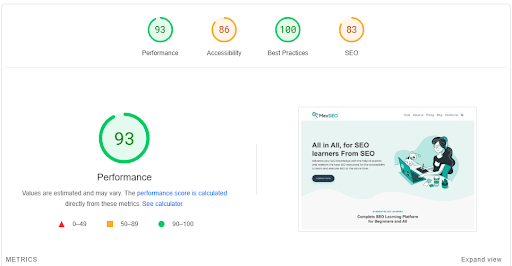

5. Optimize your loading speed

It is clear that, to save the Google crawler reading time per URL and, ultimately, make things easier for it, the loading speed of each of your pages plays an important role.

For this reason, you must ensure that each and every one of your URLs has the lowest loading time possible, optimizing each of the factors needed for good WPO (Web Performance Optimization).

As a reference, any page that takes more than 3 seconds to load is considered “slow”, although obviously the further below this figure we get, the better.
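If you have already measured the load time of your pages with the tool of your choice, a short script can flag the ones above that 3-second reference. The measurements and names below are invented for the example.

```python
# Reference threshold mentioned in the text: more than 3 seconds is "slow".
SLOW_THRESHOLD_SECONDS = 3.0


def slow_pages(load_times, threshold=SLOW_THRESHOLD_SECONDS):
    """Return the URLs slower than the threshold, slowest first."""
    slow = [url for url, seconds in load_times.items() if seconds > threshold]
    return sorted(slow, key=lambda url: load_times[url], reverse=True)


# Hypothetical measurements: URL -> load time in seconds.
load_times = {
    "/": 1.8,
    "/blog/": 3.4,
    "/shop/": 5.2,
    "/contact/": 3.0,
}

print(slow_pages(load_times))
```

Sorting from slowest to fastest gives you a simple priority list for your WPO work.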

6. Coherent link structure

Similarly, another factor that can seriously waste the crawl budget I am talking about today is having an internal linking strategy that is irregular or makes little sense.

This is because, in our attempt to add as many internal links as possible to our content to improve our SEO, we can cause the opposite effect if we implement them without any criteria or strategy.

How to Increase the Crawl Budget of my Website?

Just as we can fix some of the errors described above, we can also take steps to increase our crawl time.

These are some of the actions that I recommend:

1. Publish content periodically

If Google is what it is today, it is obviously because it is built on a very well-designed search algorithm, intended so that only the truly best results are in the top 10 of the SERP.

Therefore, if it detects that your publication rate is irregular or that, to make matters worse, your content is quite old and you have not updated or improved it for a long time, it will be difficult for you to increase your crawl budget.

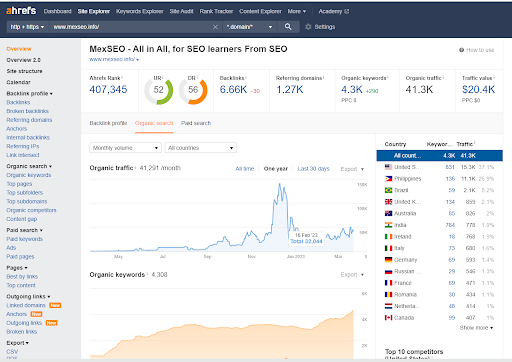

2. Increase your authority

You will surely be familiar with the famous Domain Authority (DA) metric from Moz that, until recently, was widely used in SEO to measure, in a certain way, the “quality” of a website.

Today, I personally rely much more on the authority reported by tools like Ahrefs.

One of the ways to increase that authority is to have a good link building strategy.

In this way, if we get a large number of quality backlinks to our domain, we will signal to Google that we “deserve” to have it spend more time on our site.

Conclusion

As you have seen, there are many factors that can make the crawl budget of your website less than optimal.

In addition, as I have shown you, increasing this time budget makes Google pay more attention to us and, therefore, to each of the URLs across our entire domain.